Designing Trust at Scale.

A Human-Centered Framework for Explainable AI

Designing for Trust

The exponential growth of AI within the Workday platform, from financial forecasting to talent management, created a strategic imperative: we could not scale AI adoption without first scaling user trust.

The challenge was profound. As Workday moved toward complex intelligent features, users were increasingly asked to act on suggestions, predictions, and recommendations generated by opaque algorithms. Failing to explain why an AI produced a specific output created systemic risk, ranging from user confusion and rejection to potential legal and ethical liability under emerging regulation.

This project was driven by three core strategic forces:

User Confidence: We needed to understand what information users require to confidently and responsibly act on AI outputs.

Regulatory Compliance: We had to proactively address the coming EU AI Act, specifically the deadline for Transparency Risk AI obligations (August 2, 2026).

Scalability: Existing AI teams were pursuing fragmented, one-off solutions. We required a unified, platform-level design standard to make the abstract concept of Explainable AI (XAI) accessible to everyone.

My Role & Foundational Impact

As the Principal UX Designer, I acted as a strategic leader: defining the problem, driving the research methodology, and translating the findings into a standard that could be adopted across the entire platform.

Strategic Influence

Strategic Leadership: Highlighted the risk of fragmented XAI efforts, unifying the work of the Research, Design, and Engineering platform teams.

Synthesizing Complexity: Transformed complex academic and legal requirements (like the EU AI Act) into simple, structured design criteria.

Evangelism: Presented the framework to leadership and published the guidelines on our Canvas Design System for adoption by product teams globally.

The Goal

My goal was to lead the research and design of a comprehensive, evidence-based design framework for AI explainability. This framework needed to make complex concepts accessible to hundreds of designers, product managers, and developers, ensuring every new AI feature fostered trust and provided transparency from day one.

The Solution: A Collaborative, Research-Driven Framework

I led a research and design programme that successfully translated rigorous academic principles into a set of practical, scalable design heuristics, a pattern library, and educational tools.

Academic Rigor and Collaboration

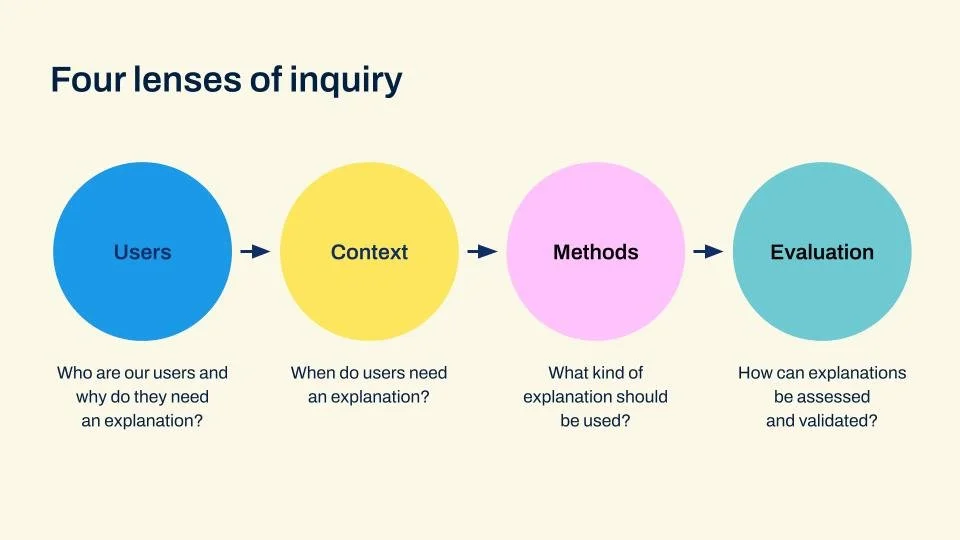

The foundation of the framework was built upon extensive secondary research and anchored by critical collaboration with AI practitioners from Workday and the industry at large. To ensure the framework was both ethical and user-centered, we partnered directly with PhD research fellows from the T.U.D. (Technological University Dublin) Ethics and AI Centre. This collaboration was essential in bridging the gap between academic XAI theory and the practical application of enterprise SaaS design.

Making XAI Accessible

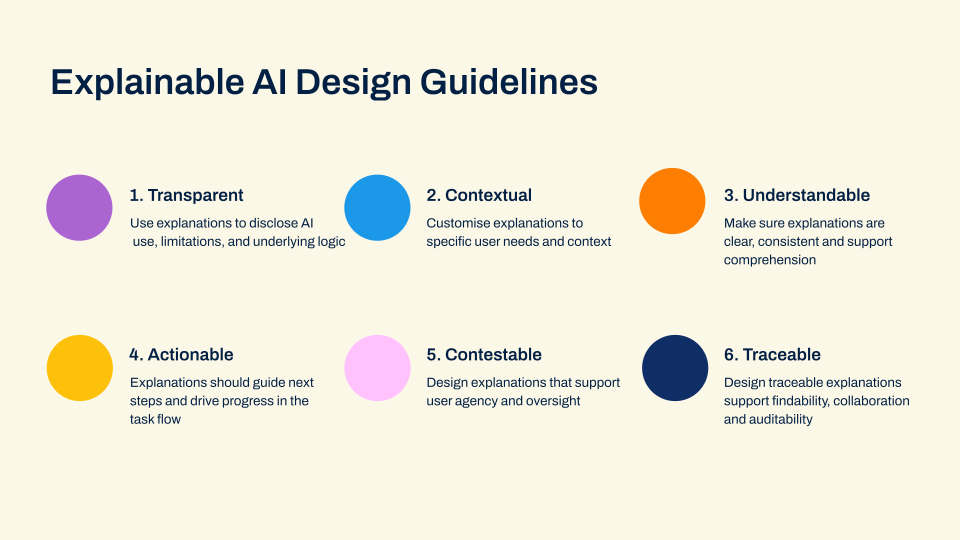

The resulting framework moves beyond simple badges and disclaimers. It is structured around six core heuristics—principles that transform the abstract requirements of explainability into actionable design criteria.

| Core Heuristic | The Design Goal | Design Example |
| --- | --- | --- |
| Transparent | Visibly disclose the use of AI and its limitations. | AI Generated Badge and clear, persistent disclaimers. |
| Contestable | Provide the user with agency and oversight over outputs. | Clear feedback loops and counterfactual "what-if" scenarios for challenging AI-generated recommendations. |
| Actionable | Guide the user’s next steps to drive task completion. | Progressive disclosure of explanations, providing details only when needed. |
| Traceable | Support auditability and accountability for AI decisions. | Activity streams and version history logs for collaborative agentic tasks. |

To make these concepts accessible, the final guidelines include a Design Audit of existing patterns, a Starter Toolkit, and the Explainable AI Diagnostic Worksheet, a practical guide that helps teams identify a user’s specific explainability needs based on the questions they ask.

Founding the AI Centre of Excellence

This project delivered a foundational element for Workday's strategic growth and innovation in Europe. The AI Explainability Guidelines were officially adopted as a design standard for the new Workday AI Centre of Excellence (AI CoE) in Dublin. The centre was announced in October 2025 as part of a €175 million investment supported by the Irish government, along with the creation of 200 specialised AI roles to accelerate European innovation.

Workday Announces New AI Centre of Excellence in Dublin to Accelerate European Innovation.

The guidelines ensure that all future AI development emanating from the CoE is built with trust and responsibility at the core.